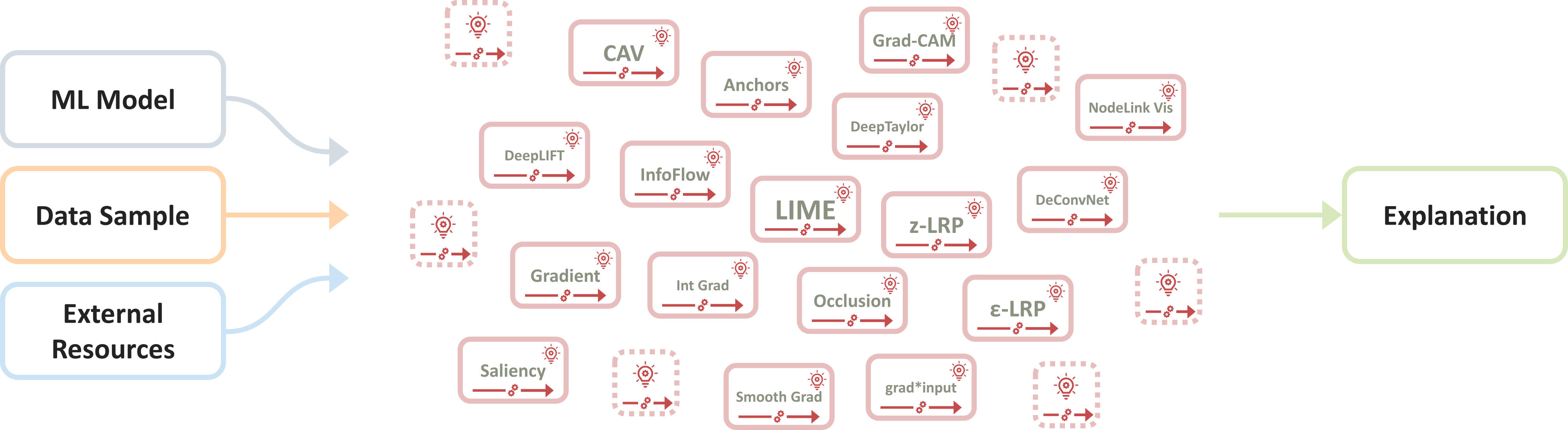

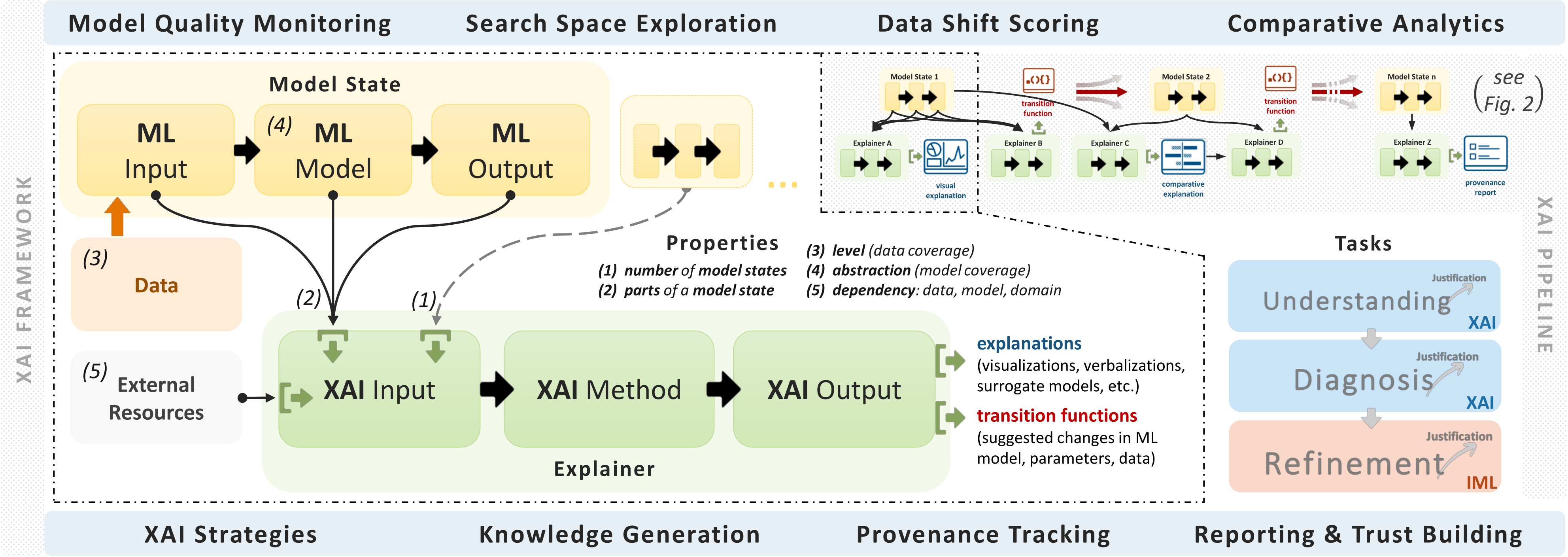

The recent abundance of artificial intelligence and the resulting spread of black-box models in critical domains have led to an increasing demand for explainability. The field of eXplainable Artificial Intelligence (XAI) tackles this challenge by providing ways to interpret the decisions of such models. This, in turn, has resulted in a wide variety of explanation techniques, each with its own dependencies and each producing highly diverse outputs.

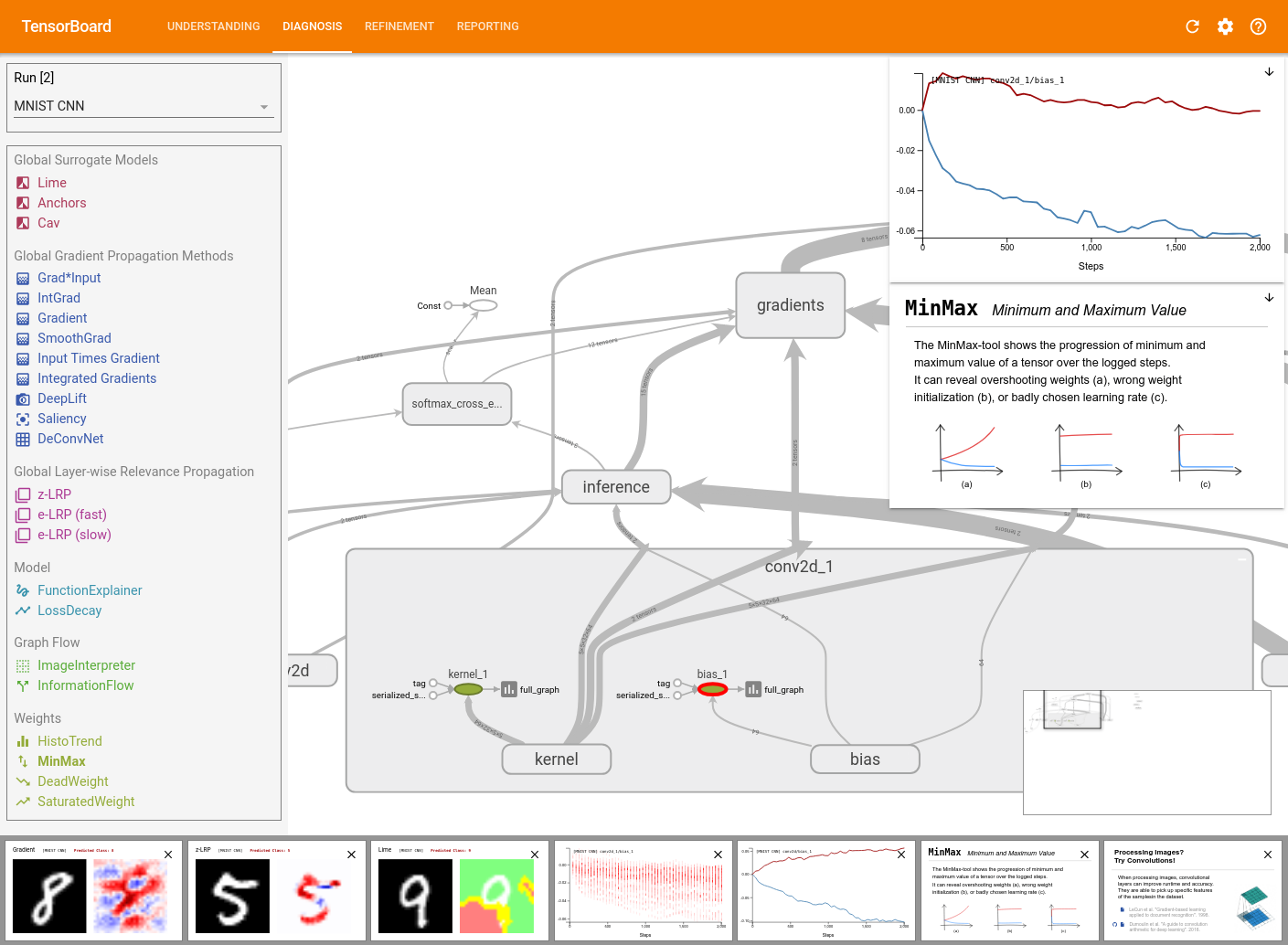

The framework for explainable AI and interactive machine learning. Making XAI accessible.